The "new" way to do networking with SDN

SDN applications, analysis and scaling

Research papers review and summary.

PART 1 - Here link to PART 2

In this post there is a practical step-by-step project with POX SDN, OpenFlow, Click and NFV.

The arrival of SDN and OpenFlow

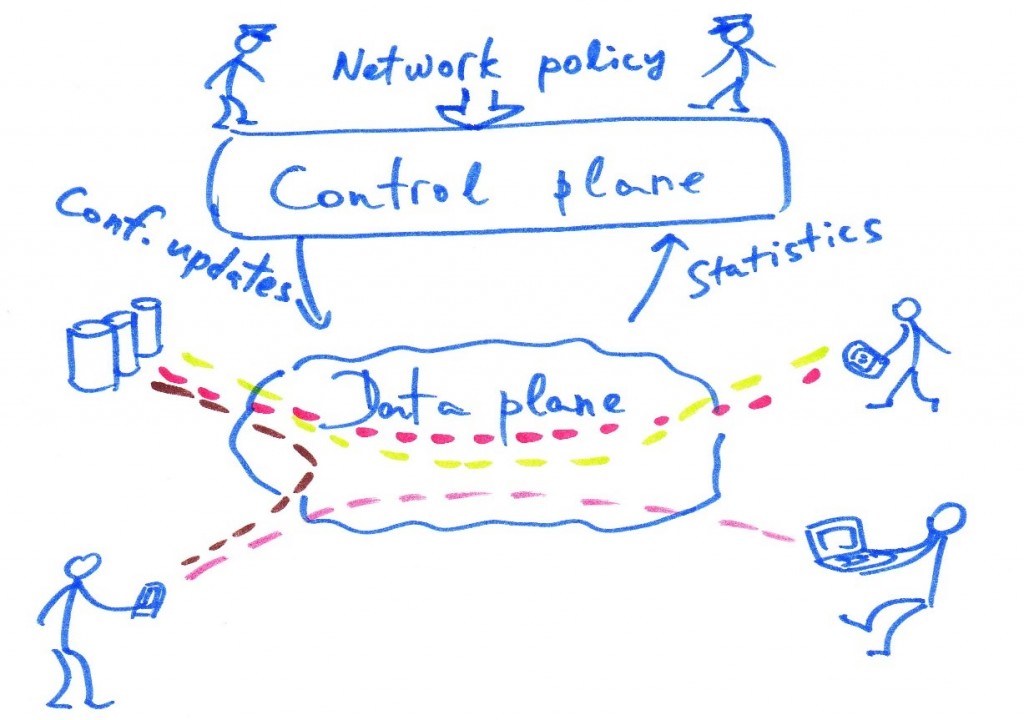

Improving network protocols and deploying new features or applications is very hard on traditional networks. The path from designing a change to implementing it is extremely long and costly. Today network functions can be virtualized and aggregated into a single machine acting as a controller. The control plane of routers and switches is decoupled from the data plane and moved to a separate device. This is where SDN comes in, with many advantages: higher flexibility, better scaling, and easier implementation and maintenance for developers, which in turn reduces hardware costs. The key idea is that network devices now implement only basic primitives and constantly talk to the Domain Controller to push statistics and pull routing information. The protocol used to push and set rules into the “dumb” switches is called OpenFlow.

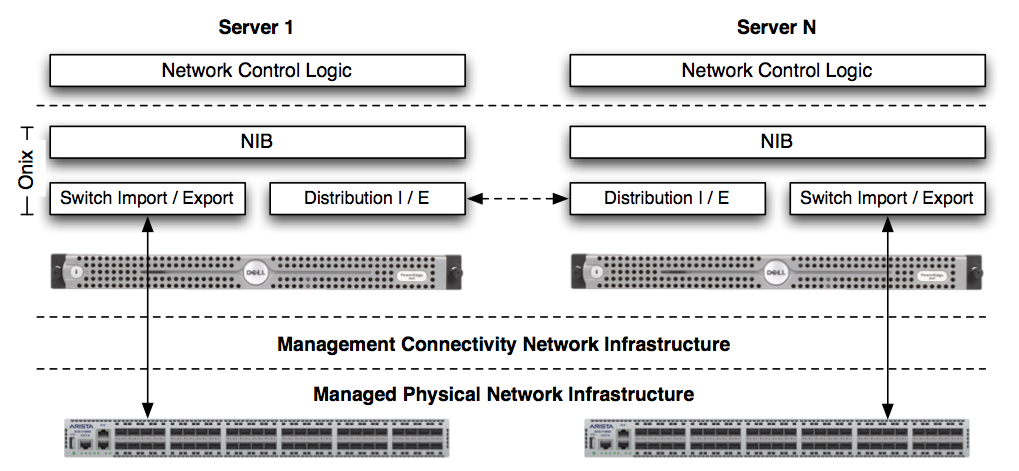

Going back to 2010, paper [1] presents ONIX, a control platform that exposes a simple API and introduces a Network Information Base (NIB), similar to a RIB. ONIX has four basic components: the physical infrastructure (switches and routers), the connectivity infrastructure (used as a management network), the Onix distributed system (a single instance or a cluster) and the control logic (implemented on top of the API). Failures can happen in any of these parts. ONIX is built on NOX and distributed among multiple servers. The API lets applications read and write the state of data objects in the NIB, which is the focal point of the system.

The NIB is the complete network graph: every device, link, route and attribute is stored as an Entity.

Onix scales through partitioning and aggregation: the controller is replicated, each switch contacts only a subset of the controller instances, and each instance manages only a subset of the whole NIB.

When the NIB changes, ONIX translates the change into an OpenFlow message and pushes it to the affected device. To do this, import/export modules sit behind the NIB, waiting for polls or changes.
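The change-then-export flow can be illustrated with a minimal Python sketch. It is not Onix code: the `NIB`, `Entity` and `ExportModule` classes, the listener mechanism and the dictionary used as a stand-in for an OpenFlow message are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Entity:
    """A NIB entity (device, port, link, ...) holding key/value attributes."""
    name: str
    attributes: Dict[str, object] = field(default_factory=dict)


class NIB:
    """Toy Network Information Base: a dictionary of entities plus listeners."""

    def __init__(self) -> None:
        self.entities: Dict[str, Entity] = {}
        self.listeners: List[Callable[[Entity, str, object], None]] = []

    def subscribe(self, callback: Callable[[Entity, str, object], None]) -> None:
        self.listeners.append(callback)

    def write(self, name: str, key: str, value: object) -> None:
        entity = self.entities.setdefault(name, Entity(name))
        if entity.attributes.get(key) == value:
            return  # nothing changed, so nothing to export
        entity.attributes[key] = value
        for notify in self.listeners:
            notify(entity, key, value)


class ExportModule:
    """Hypothetical export module: turns NIB changes into flow-mod-like messages."""

    def __init__(self) -> None:
        self.sent: List[Tuple[str, dict]] = []

    def on_change(self, entity: Entity, key: str, value: object) -> None:
        # A plain dict stands in for a real OpenFlow message here.
        message = {"type": "FLOW_MOD", "match": key, "actions": value}
        self.sent.append((entity.name, message))


nib = NIB()
exporter = ExportModule()
nib.subscribe(exporter.on_change)
nib.write("switch-1", "dst=10.0.0.2", "output:3")
nib.write("switch-1", "dst=10.0.0.2", "output:3")  # duplicate write, ignored
```

Only the first write reaches the exporter, because the second one does not change the stored state.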

Another feature of ONIX is that it can store data in two different ways: in a SQL database for stable state and in a DHT for dynamic state.

Contributions

ONIX doesn’t introduce anything totally new; it builds on work from Ethane, SANE, RCP and NOX. Those systems presented the idea of a physically separated control plane, but they did not address reliability and flexibility the way ONIX does.

Among the top contributions of ONIX are its general API and flexible distribution primitives, which let designers work on a pre-tested, established base and implement control applications with all the flexibility they need.

The API allows reading and writing the state of any element in the network, each of which corresponds to a data object in the NIB. Since switches have narrower requirements and a limited amount of RAM and CPU, most of the work can be done on platforms without such limitations, such as the Domain Controller.

ONIX presents two ways of storing state information: a persistent, transactional SQL database for slowly changing state, and a memory-only DHT for dynamic state that tolerates inconsistency. ONIX lets developers choose their own trade-off, for example more consistency and durability or more efficiency.

How to implement SDN?

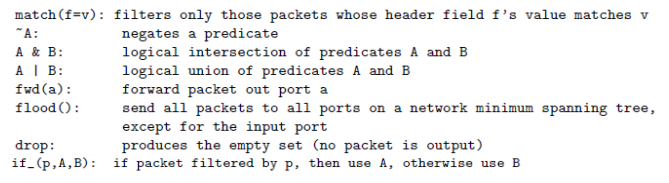

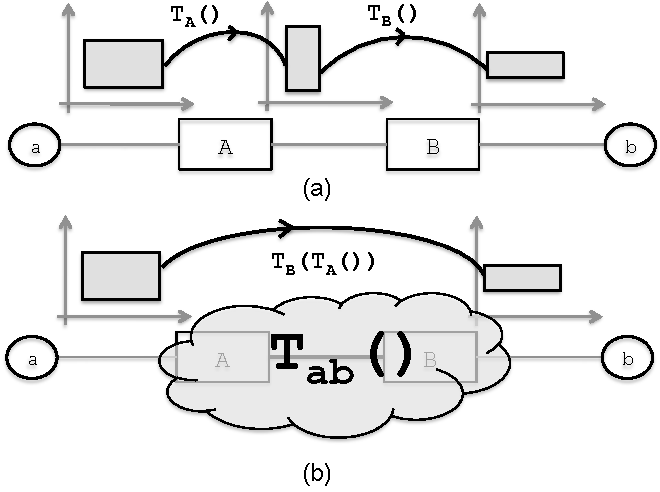

SDN application development does not offer great flexibility in terms of modularity, which is the focus of paper [2]. It introduces Pyretic, a new language and set of abstractions for building modular applications on top of SDNs. Pyretic allows packets to be processed sequentially or in parallel, producing different results for different use cases. It gives developers powerful building blocks and high-level policy functions that keep code short and readable. Physical switches can be virtually merged into a single device (many-to-one), or a single physical switch can be logically divided into multiple virtual switches handling different modules (one-to-many).

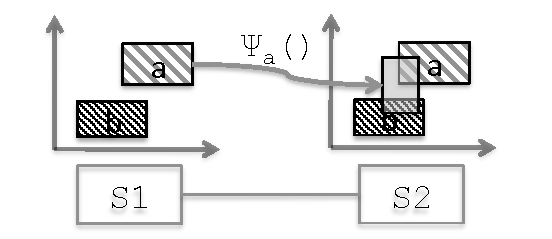

The paper shows monitoring, firewall and load-balancing examples that use different features of the language, such as the static policy language (called NetCore), which includes primitive actions (drop, flood, passthrough, ...), predicates (which act on a subset of packets), policies and queries. In Pyretic, a policy receives a packet as input and returns a multiset of localized packets as output. To be processed, packets need to be “lifted” from physical switches to the virtual instances. This up/down process is achieved with virtual tags and headers that specify input/output ports and switch names, which lets developers abstract a derived network from the underlying one. Finally, the high level of abstraction provides an elegant mechanism for implementing different network topologies and writing self-contained modules.
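To make the "policy = function from a packet to a multiset of packets" idea concrete, here is a small sketch in plain Python. It does not use the Pyretic library: the helper names (`packet`, `match`, `modify`, `sequential`, `parallel`) and the field names are assumptions chosen for illustration, and a `collections.Counter` plays the role of the multiset.

```python
from collections import Counter
from typing import Callable, Tuple

# A packet is an immutable, sorted tuple of (field, value) pairs so that it
# can be counted inside a multiset (collections.Counter).
Packet = Tuple[Tuple[str, object], ...]
Policy = Callable[[Packet], Counter]


def packet(**fields) -> Packet:
    return tuple(sorted(fields.items()))


def match(**wanted) -> Policy:
    """Predicate: pass the packet through if every field matches, else drop."""
    def policy(pkt: Packet) -> Counter:
        fields = dict(pkt)
        ok = all(fields.get(k) == v for k, v in wanted.items())
        return Counter([pkt]) if ok else Counter()
    return policy


def modify(**updates) -> Policy:
    """Action: rewrite header fields (for example the output port)."""
    def policy(pkt: Packet) -> Counter:
        return Counter([packet(**{**dict(pkt), **updates})])
    return policy


def sequential(first: Policy, second: Policy) -> Policy:
    """Feed every output packet of `first` into `second`."""
    def policy(pkt: Packet) -> Counter:
        out: Counter = Counter()
        for mid, count in first(pkt).items():
            for result, n in second(mid).items():
                out[result] += count * n
        return out
    return policy


def parallel(left: Policy, right: Policy) -> Policy:
    """Apply both policies to the same packet and merge their outputs."""
    def policy(pkt: Packet) -> Counter:
        return left(pkt) + right(pkt)
    return policy


# A forwarding module and a monitoring module, written independently.
forward = sequential(match(dstip="10.0.0.2"), modify(outport=2))
monitor = sequential(match(srcip="10.0.0.1"), modify(outport="collector"))
app = parallel(forward, monitor)
result = app(packet(srcip="10.0.0.1", dstip="10.0.0.2", inport=1))
```

Here `result` holds two packets, one per module, which is the point of parallel composition: neither module had to know about the other.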

Contributions

Software Defined Networking has limited support for modular component design and programming; the paper’s goal is to show and explain a new way of composing SDN applications from multiple components. Modularity is the key: it frees developers from implementing monolithic applications against an API (as in ONIX) and lets them focus on high-level policies. The paper presents two ways of handling packets, sequential composition and parallel composition. It also proposes “network objects”, which constrain what each module can see and do, allowing information hiding and protection.

The Pyretic language is a step forward in the development of SDNs, bringing modular and extensible programming. Packets are represented as dictionaries, so adding virtual headers is simple and allows fine-grained modularity and policy development.

Other control platforms such as NOX offer low-level interfaces; composition, isolation and virtualization are the advantages added by Pyretic, and programmers do not need to resolve conflicts by hand.

Pyretic can build sophisticated controller applications for large networks on top of a simple language, Python. Details about Pyretic are in [3].
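Because packets are dictionaries, lifting a packet to a virtual switch can be sketched as adding a few extra keys. The example below is a simplified illustration, not Pyretic’s implementation: the port mapping, the field names (`vswitch`, `vinport`, `voutport`) and the routing table are all hypothetical, and the fabric that would carry the packet between physical switches is left out.

```python
from typing import Dict, Tuple

# Hypothetical many-to-one mapping: (physical switch, port) -> virtual port of
# one derived "big switch" named V.
PORT_MAP: Dict[Tuple[str, int], int] = {("s1", 1): 1, ("s2", 1): 2, ("s3", 2): 3}
REVERSE_MAP = {vport: phys for phys, vport in PORT_MAP.items()}
VIRTUAL_FIELDS = {"vswitch", "vinport", "voutport"}


def lift(pkt: dict) -> dict:
    """Tag a physical packet with virtual headers saying where it entered V."""
    vport = PORT_MAP[(pkt["switch"], pkt["inport"])]
    return {**pkt, "vswitch": "V", "vinport": vport}


def virtual_policy(pkt: dict) -> dict:
    """Policy written against the derived switch only: route on destination IP."""
    routes = {"10.0.0.1": 1, "10.0.0.2": 2, "10.0.0.3": 3}
    return {**pkt, "voutport": routes[pkt["dstip"]]}


def lower(pkt: dict) -> dict:
    """Map the virtual output port back to a physical one and strip virtual headers."""
    switch, port = REVERSE_MAP[pkt["voutport"]]
    physical = {k: v for k, v in pkt.items()
                if k not in VIRTUAL_FIELDS and k != "inport"}
    return {**physical, "switch": switch, "outport": port}


incoming = {"switch": "s1", "inport": 1, "dstip": "10.0.0.3"}
outgoing = lower(virtual_policy(lift(incoming)))
```

The policy in the middle never mentions `s1`, `s2` or `s3`; it only sees the virtual ports, which is the kind of information hiding the paper is after.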

How to test and debug SDN applications?

Two papers go through the testing and debugging of SDN applications:

- “Header Space Analysis: Static Checking For Networks”

- “A NICE Way to Test OpenFlow Applications”

Header Space Analysis

In the past, network devices were very simple and only forwarded packets; today there is a large number of protocols, encapsulation methods and policies to keep in mind. This makes problems hard and slow to debug, which is one reason why paper [4] presents the Header Space Analysis framework for static analysis of production networks.

The key idea is that the framework is protocol agnostic, so it can test different environments with different header formats and syntax without reinventing the wheel. Hassel, the tool that implements the framework, doesn’t care about semantics: it just treats a packet header as a sequence of {0,1} bits, a point in a space of L dimensions, where L is the header length. The data part of the packet is ignored, since it is irrelevant for the calculations. Using transfer functions, the framework is able to map, simulate and test the network for forwarding loops (finite and infinite) and configuration errors.

The framework models network boxes and applications such as firewalls and NAT geometrically, with transfer functions that operate through primitive set-algebra operations (intersection, union, complementation and difference). Loops can be detected by injecting a packet into the network and checking whether the same packet comes back to the injection port. Static analysis can also be applied to reachability checking, to verify whether packets can logically reach a destination and, using the range inverse computation, whether a destination can receive packets from a given source.

Hassel is implemented in Python 2.6, along with a parser for Cisco IOS configuration output that automatically generates the transfer functions and the model of the router. The implementation also employs optimizations that yield a 19x to 400x speedup.

Tests on the Stanford network showed that the tool is very useful for detecting loops and configuration mistakes.
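The following Python sketch illustrates the flavor of these operations on tiny 4-bit headers. It is not Hassel’s code: headers are plain strings over `0`, `1` and `x` (wildcard), the rule format is invented for the example, and links are collapsed so that a rule’s output port is directly the next box’s input port. It only reports whether some header returns to the injection port and does not distinguish finite from infinite loops.

```python
from typing import List, Optional, Tuple

Header = str  # wildcard expression over {'0', '1', 'x'}, one character per bit


def intersect(a: Header, b: Header) -> Optional[Header]:
    """Bitwise intersection of two wildcard expressions; None means empty."""
    out = []
    for x, y in zip(a, b):
        if x == "x":
            out.append(y)
        elif y == "x" or x == y:
            out.append(x)
        else:
            return None  # one side requires 0, the other requires 1
    return "".join(out)


def rewrite(h: Header, pattern: Header) -> Header:
    """Overwrite bits of h where pattern is 0/1; 'x' in pattern keeps the bit."""
    return "".join(hb if pb == "x" else pb for hb, pb in zip(h, pattern))


# A rule: (input port, match, rewrite pattern, output port).
Rule = Tuple[str, Header, Header, str]


def transfer(rules: List[Rule], header: Header, port: str) -> List[Tuple[Header, str]]:
    """Toy transfer function: map (header, port) to every (header', port')."""
    results = []
    for in_port, match, pattern, out_port in rules:
        if in_port != port:
            continue
        hit = intersect(header, match)
        if hit is not None:
            results.append((rewrite(hit, pattern), out_port))
    return results


def finds_loop(rules: List[Rule], start_port: str, width: int, max_hops: int = 16) -> bool:
    """Inject the all-wildcard header at start_port and see if any part returns."""
    frontier = [("x" * width, start_port)]
    for _ in range(max_hops):
        frontier = [nxt for h, p in frontier for nxt in transfer(rules, h, p)]
        if any(p == start_port for _, p in frontier):
            return True
    return False


rules = [
    ("A", "10xx", "xxxx", "B"),  # A forwards headers starting with 10 to B
    ("B", "1xxx", "xx11", "C"),  # B sets the last two bits and forwards to C
    ("C", "xx11", "xxxx", "A"),  # C sends anything ending in 11 back to A
]
has_loop = finds_loop(rules, "A", width=4)
```

Injecting the all-wildcard header at `A` narrows it to `10xx`, then rewrites it to `1011`, which `C` sends back to `A`, so the sketch reports a loop.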

Contributions

The idea of transfer functions used by Hassel is very similar to the ASE mapping in axiomatic routing. Hassel, however, does not try to understand protocols and packet headers: it treats every header as a point in {0,1}^L, where L is the header length. Hassel introduces several new terms to define a domain for the analysis, such as Header Space and Network Space, along with the transfer functions. The transfer functions “move” packets from one port to another, “transforming” the packet at each step.

Another important feature is the possibility to slice the network into different parts (as MPLS, VLANs and FlowVisor do) and test each one for security isolation and leaks. This is done by detecting whether a possible transfer-function output of one domain ends up in another domain where it is not allowed, for example whether a packet from one VLAN can cross into another VLAN while its headers are processed and rewritten.

A great contribution of Hassel is that it makes it easier to spot network violations with network transfer functions in a static, risk-free way. The implementation was shown to find real mistakes in the Stanford network in a considerably short time.

A NICE Way to Test OpenFlow Applications

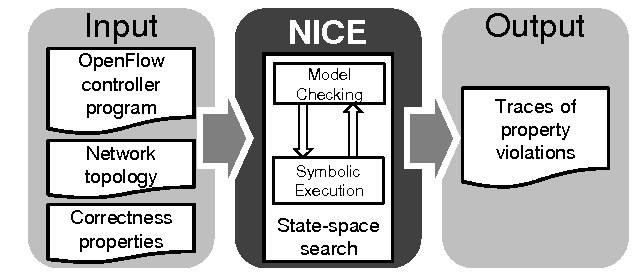

With the increasing adoption of SDN technology, asynchronous and distributed systems become difficult to test for certain classes of bugs. This is the gap that NICE (No bugs In Controller Execution) tries to fill [5].

NICE is a tool for testing unmodified controller programs on the NOX platform. It makes use of two important techniques: model checking and symbolic execution.

To test an application, the system must be modeled by enumerating its possible states, which can be infinite. Model checking gathers all the possible states, but since that set is too large, symbolic execution is coupled with it to test only the relevant code paths. Model checking explores the system states and models the execution in terms of components, channels, component states and transitions. NICE defines “classes of packets” and chooses a “representative packet” to exercise each specific state, and in this way defines different domain-specific search strategies.

NICE sends packets under different event interleavings, simulating real-world execution as closely as possible. Controller programs are viewed as a set of event handlers that create transitions (changes to global variables). Symbolic execution, instead, does not model the state space but focuses on identifying relevant inputs; on its own it is still not sufficient.

The combination of the two is what allows NICE to scale and uncover tricky bugs in a potentially unbounded state space. If a transition violates a correctness property (these are configurable), then NICE records the state and logs the error.
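The model-checking half of this idea can be sketched in a few lines of Python. This is a toy model, not NICE itself: the state layout, the event names and the rule that a switch without a rule drops the packet are assumptions made for the example, and symbolic execution is not shown.

```python
from collections import deque
from typing import Callable, Dict, List, Tuple

State = Tuple[bool, bool, str]  # (rule on s1?, rule on s2?, packet location)


def enabled_transitions(state: State) -> Dict[str, State]:
    """Return every event that may fire in this state and the state it leads to."""
    rule1, rule2, where = state
    events: Dict[str, State] = {}
    if not rule1:
        events["install_rule_s1"] = (True, rule2, where)
    if not rule2:
        events["install_rule_s2"] = (rule1, True, where)
    if where == "s1" and rule1:
        events["s1_forwards"] = (rule1, rule2, "s2")
    if where == "s2":
        # Without a rule, s2 drops the packet in this toy model (a black hole).
        events["s2_forwards"] = (rule1, rule2, "host" if rule2 else "dropped")
    return events


def no_black_holes(state: State) -> bool:
    """Correctness property: the packet is never dropped."""
    return state[2] != "dropped"


def model_check(initial: State, prop: Callable[[State], bool]) -> List[List[str]]:
    """Breadth-first search over event interleavings; return violating traces."""
    violations: List[List[str]] = []
    seen = {initial}
    queue = deque([(initial, [])])
    while queue:
        state, trace = queue.popleft()
        for event, nxt in enabled_transitions(state).items():
            if not prop(nxt):
                violations.append(trace + [event])
                continue
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, trace + [event]))
    return violations


# The packet starts at s1 and no rules are installed yet.
bugs = model_check((False, False, "s1"), no_black_holes)
```

The search finds the interleaving in which the rule on `s1` is installed and used before the rule on `s2` exists, which is the kind of rule-installation race this class of tools targets.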

Contributions

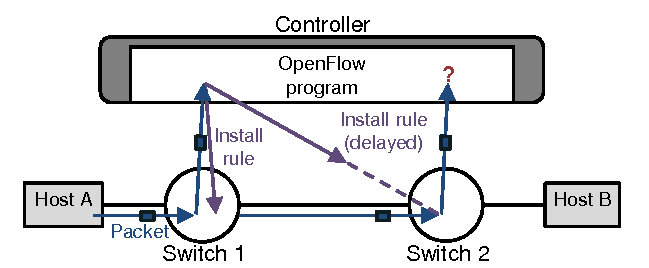

A significant problem in SDN applications is the delay in installing rules across different switches, which causes unintended behavior and performance degradation. The challenges that NICE tries to solve stem from the large space of inputs, such as switch states, input packets and event orderings.

An important contribution of NICE is its ability to generate streams of packets depending on the controller state, in order to test the program and uncover forwarding loops and black holes. It’s important to note that NICE takes work off the developer, asking only for the network topology and hosts.

NICE couples symbolic execution with model checking to lower the number of states and focus on the ones that can create meaningful transitions. This makes it possible to test applications without worrying about the scalability problems of a huge state space. Furthermore, NICE provides a set of correctness properties with their own testing implementation, which lets developers quickly test and build custom modules.

NICE has been shown to find different classes of bugs in standard, unmodified controller programs.

How to scale SDN applications at large?

OpenFlow imposes excessive overhead on the controller in high-performance networks, which introduces unacceptable latency and restricts system performance. Visibility over all flows is simply unachievable in high-performance networks. OpenFlow’s design limits scalability because two steps involve the controller and put too much load on it: flow setup and statistics gathering.

The paper presents DevoFlow [6], a slightly modified version of OpenFlow that aims to overcome these disadvantages by handing forwarding decisions for most flows back to the routers. The paper analyzes the trade-offs between centralized management and its costs, and describes DevoFlow’s new mechanisms. Its goal is to keep as many flows in the data plane as possible (going through the control plane is expensive), while still maintaining enough visibility over the network.

Central control has many advantages, such as near-optimal traffic management, simplified policy development, and simple, future-proof switches, but it creates overhead in hardware resources, communication load, and delay, and these become the bottleneck.

DevoFlow introduces rule cloning and local actions, which aim to relieve TCAM usage and controller overload. Multipath is also supported; unlike ECMP, the candidate paths are not required to have equal cost.

Finally, the evaluation shows that DevoFlow can improve throughput by 32% on Clos networks and by 55% on HyperX networks, which is a great increase.

Contributions

The important takeaway from DevoFlow is that routing decisions for most flows, called microflows, are given back to the routers, while only the significant ones, the elephant flows, are managed by the controller.

The paper shows the pros and cons of traditional centralized OpenFlow management and compares it with DevoFlow, which devolves control and statistics collection. It is important to point out the huge difference between line-card bandwidth and the bandwidth between the switch ASIC and its CPU: the latter is limited to 17 Mbps, compared with 300 Gbps for the line card.

Statistics gathering and flow setup compete for this limited bandwidth. DevoFlow introduces rule cloning to augment the actions of wildcard rules, keeping the resulting entries in the data plane and relieving TCAM memory. Hedera considers 5 seconds a good polling interval, but for high-performance networks it must be less than 500 ms. The multi-threaded Maestro controller can install rules twice as fast as NOX, while DevoFlow instead introduces different statistics collection mechanisms such as sampling, triggers and approximate counters.
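As a rough illustration of how rule cloning and triggers keep the controller out of the fast path, consider the sketch below. It is a toy model, not DevoFlow’s switch logic: the `clone` flag, the string-prefix match, the byte threshold and the `reports` list standing in for controller messages are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

FlowKey = Tuple[str, str, int]  # (source IP, destination IP, destination port)


@dataclass
class WildcardRule:
    dst_prefix: str      # simplified: match on a destination string prefix
    out_port: int
    clone: bool = True   # hypothetical flag standing in for a "clone" bit


@dataclass
class Switch:
    rule: WildcardRule
    threshold_bytes: int  # trigger level for reporting a flow
    microflows: Dict[FlowKey, int] = field(default_factory=dict)  # per-flow bytes
    reports: List[FlowKey] = field(default_factory=list)          # sent to controller

    def handle(self, key: FlowKey, size: int) -> int:
        """Forward a packet locally; contact the controller only via a trigger."""
        if not key[1].startswith(self.rule.dst_prefix):
            raise LookupError("no matching rule in this toy switch")
        if self.rule.clone:
            # The wildcard rule is cloned into a per-flow entry with its own counter.
            before = self.microflows.get(key, 0)
            self.microflows[key] = before + size
            if before < self.threshold_bytes <= self.microflows[key]:
                self.reports.append(key)  # the flow just crossed the threshold
        return self.rule.out_port


sw = Switch(WildcardRule("10.0.", out_port=1), threshold_bytes=3000)
mouse = ("10.0.0.1", "10.0.0.9", 80)
elephant = ("10.0.0.2", "10.0.0.9", 443)
sw.handle(mouse, 1500)
for _ in range(4):
    sw.handle(elephant, 1500)
```

Five packets are forwarded but only one report is generated, for the flow that crossed the threshold; the short flow never involves the controller.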

Finally, DevoFlow uses oblivious routing, which assigns each path a probability proportional to its link bandwidth. For example, two links A (10 Gbps) and B (1 Gbps) will have different probabilities of being chosen, 10/11 for A and 1/11 for B, which balances the load in proportion to capacity.

It was shown to achieve “94% of the throughput that dynamic routing does on the worst case”.
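The bandwidth-proportional choice in the A/B example can be written down directly. This is a minimal sketch of weighted random path selection, assuming link bandwidths are the only weights; the function names are illustrative.

```python
import random
from fractions import Fraction
from typing import Dict


def path_probabilities(bandwidth_gbps: Dict[str, int]) -> Dict[str, Fraction]:
    """Probability of each path, proportional to its link bandwidth."""
    total = sum(bandwidth_gbps.values())
    return {path: Fraction(bw, total) for path, bw in bandwidth_gbps.items()}


def pick_path(bandwidth_gbps: Dict[str, int], rng: random.Random) -> str:
    """Choose one path at random using the bandwidths as weights."""
    paths = list(bandwidth_gbps)
    weights = [bandwidth_gbps[p] for p in paths]
    return rng.choices(paths, weights=weights, k=1)[0]


links = {"A": 10, "B": 1}
probs = path_probabilities(links)  # A: 10/11, B: 1/11

rng = random.Random(0)  # fixed seed so the run is repeatable
picks = [pick_path(links, rng) for _ in range(11_000)]
```

Over many flows, roughly ten out of every eleven land on link A, matching the 10/11 and 1/11 split.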

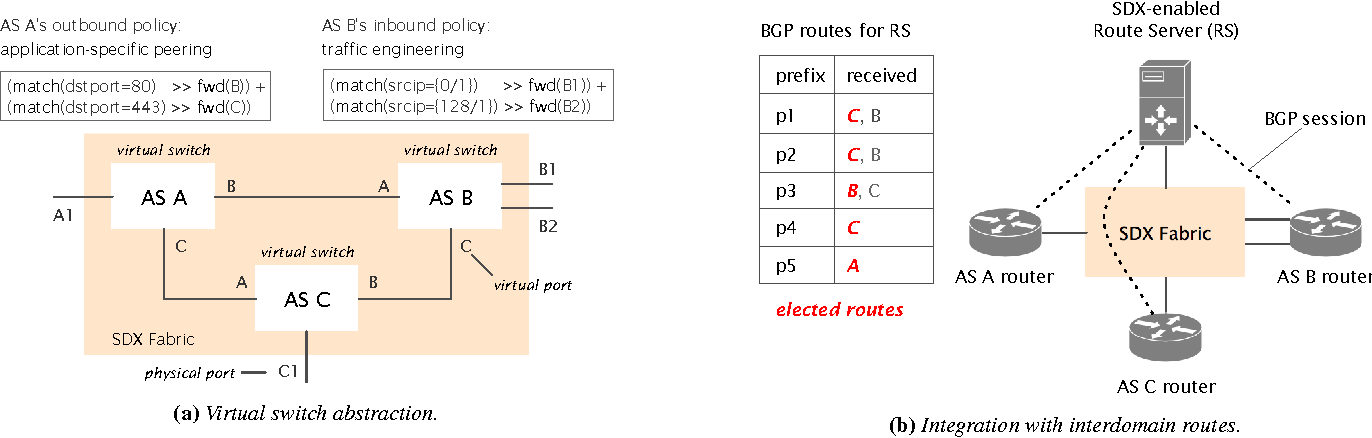

The SDX - Software Defined Internet Exchange

One problem this paper tries to address is the limited way in which today’s networks forward packets. Forwarding is based on destination IP prefixes, which offers little flexibility and no way of matching on other header fields or on BGP attributes.

The Software Defined Internet Exchange (SDX) [7] is a good solution to the problem: it allows peers to run SDN applications while ensuring system scalability and avoiding BGP route conflicts. The paper addresses major problems of BGP such as the one just mentioned, the difficult and indirect way of expressing policies (AS path, local pref, etc.) and the impossibility of controlling end-to-end flows rather than just immediate neighbors.

Furthermore, the approach must be scalable and deployable, and must provide a good programming abstraction. SDX also supports inbound traffic engineering (TE): at the central location, an AS can install rules and control traffic according to IP address and port.

Anycast is supported for load balancing, and SDX can also redirect flows to middleboxes when a possible DoS attack is detected. Pyretic is the language used to write policies, and SDX works by gathering them from the ASes, augmenting them and then translating them into flow rules. The process is multi-stage and involves a route server, which calculates the best path for each prefix and re-advertises the routes. Augmenting policies causes an explosion in size, but this can be largely reduced by grouping prefixes into forwarding equivalence classes (FECs) and tagging the different types of traffic directly on the border routers.
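The prefix-grouping step can be illustrated with a short sketch. It is not the SDX compiler: the input format (a best next hop plus the set of policy names that touch each prefix), the policy name `web-via-C` and the integer tags are assumptions made to show why grouping shrinks the rule set.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, List, Tuple

# Hypothetical input: for every prefix, the BGP best next hop and the set of
# participant policies that apply to it.
Behaviour = Tuple[str, FrozenSet[str]]


def group_into_fecs(prefixes: Dict[str, Behaviour]) -> Dict[Behaviour, List[str]]:
    """Group prefixes that share exactly the same forwarding behaviour."""
    groups: Dict[Behaviour, List[str]] = defaultdict(list)
    for prefix, behaviour in sorted(prefixes.items()):
        groups[behaviour].append(prefix)
    return dict(groups)


def assign_tags(groups: Dict[Behaviour, List[str]]) -> Dict[str, int]:
    """Give each group one tag, so flow rules match the tag, not every prefix."""
    tags: Dict[str, int] = {}
    ordered = sorted(groups, key=lambda b: (b[0], sorted(b[1])))
    for tag, behaviour in enumerate(ordered):
        for prefix in groups[behaviour]:
            tags[prefix] = tag
    return tags


prefixes = {
    "10.1.0.0/16": ("AS-B", frozenset({"web-via-C"})),
    "10.2.0.0/16": ("AS-B", frozenset({"web-via-C"})),
    "10.3.0.0/16": ("AS-B", frozenset()),
    "10.4.0.0/16": ("AS-C", frozenset()),
}
groups = group_into_fecs(prefixes)
tags = assign_tags(groups)
```

Four prefixes collapse into three groups, so the switch needs three tag-matching rules instead of one rule per prefix; the saving grows with the number of prefixes that behave identically.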

Contributions

The main goal of SDX is to give each participant (physical or remote) the illusion of its own SDN switch, which simplifies policy creation and management in multiple ways.

The paper also identifies classes of applications that can be deployed. One of them is “application-specific peering”, which allows ASes to exchange traffic only for a subset of application classes (for example, only YouTube traffic); SDX easily integrates this by installing rules for groups of flows.

Importantly, SDX isolates ASes while aggregating the participants’ policies, so that each one can only act on its own traffic. SDX also ensures consistency with BGP routes through the use of filters, so no loops can be created.

The paper also points out that prefixes tend to be stable, that BGP route changes affect only a small part of the forwarding table, and that BGP route changes occur in bursts followed by long quiet periods. These observations greatly helped in designing and improving the policy compilation process.

Here link to PART 2

References

- [1] T. Koponen, M. Casado, and N. Gude, “Onix: A distributed control platform for large-scale production networks,” Proceedings of the 9th USENIX Symposium on Operating Systems Design and Implementation, Jan. 2010.

- [2] C. Monsanto, J. Reich, N. Foster, J. Rexford, and D. Walker, “Composing Software Defined Networks,” in 10th USENIX Symposium on Networked Systems Design and Implementation (NSDI 13), Lombard, IL, 2013, pp. 1–13 [Online]. Available at: https://www.usenix.org/conference/nsdi13/technical-sessions/presentation/monsanto

- [3] J. Reich, C. Monsanto, N. Foster, J. Rexford, and D. Walker, “Modular SDN programming with pyretic,” USENIX Login, vol. 38, pp. 128–134, Jan. 2013.

- [4] P. Kazemian, G. Varghese, and N. McKeown, “Header Space Analysis: Static Checking for Networks,” in 9th USENIX Symposium on Networked Systems Design and Implementation (NSDI 12), San Jose, CA, 2012, pp. 113–126 [Online]. Available at: https://www.usenix.org/conference/nsdi12/technical-sessions/presentation/kazemian

- [5] M. Canini, D. Venzano, P. Peresini, D. Kostic, and J. Rexford, “A NICE Way to Test OpenFlow Applications,” in 9th USENIX Symposium on Networked Systems Design and Implementation (NSDI 12), San Jose, CA, 2012, pp. 127–140 [Online]. Available at: https://www.usenix.org/conference/nsdi12/technical-sessions/presentation/canini

- [6] A. R. Curtis, J. Mogul, J. Tourrilhes, P. Yalagandula, P. Sharma, and S. Banerjee, “DevoFlow: Scaling Flow Management for High-Performance Networks,” in Proceedings of the ACM SIGCOMM 2011 Conference, SIGCOMM’11, 2011, vol. 41, pp. 254–265, doi: 10.1145/2018436.2018466.

- [7] A. Gupta et al., “SDX: A Software Defined Internet Exchange,” in Proceedings of the 2014 ACM Conference on SIGCOMM, New York, NY, USA, 2014, pp. 551–562, doi: 10.1145/2619239.2626300 [Online]. Available at: http://doi.acm.org/10.1145/2619239.2626300